Clare Snyder

I am an assistant professor in the Department of Technology, Operations, and Statistics at NYU Stern. I received my PhD from Michigan Ross, where I was advised by Samantha Keppler and Stephen Leider.

Broadly, I am interested in human behavior within artificial intelligence (AI)-enabled service systems. My research shows that worker-algorithm interactions change in response to features of the customers in the system. Likewise, worker-customer interactions change when algorithms are introduced to a service, and algorithms' impact on customers is mediated by worker behavior.

Research

1. Algorithm Reliance, Fast and Slow.

with Samantha Keppler and Stephen Leider

Management Science.

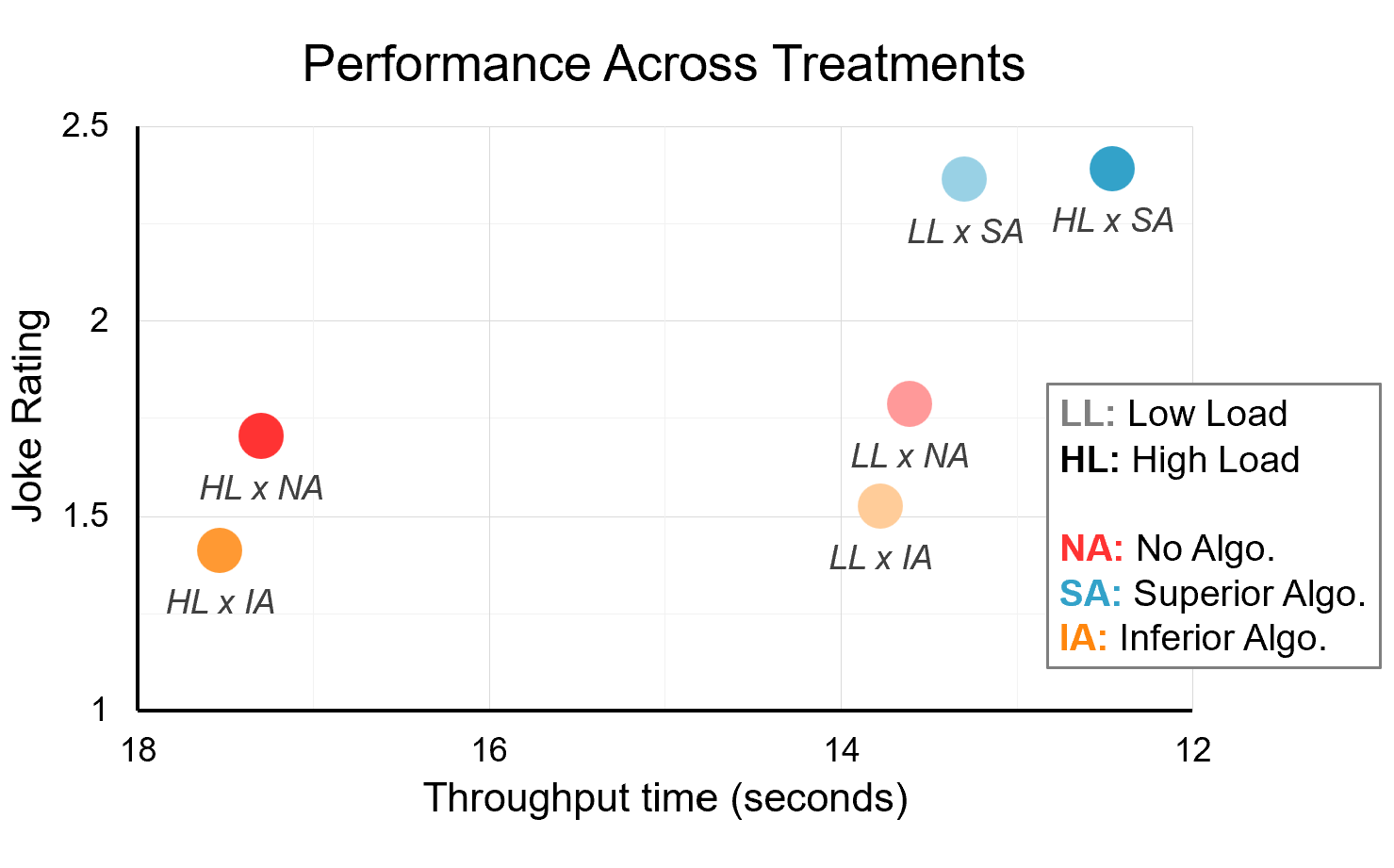

In algorithm-augmented service contexts where workers have decision authority, they face two decisions about the algorithm: whether to follow its advice, and how quickly to do so. How do workers use algorithms to manage the system load created by customer queues? In a novel laboratory experiment, we find that participants do not necessarily speed up by using algorithms' advice; their throughput times decrease relative to the no-algorithm baseline only when the system load is high and algorithm quality is superior. Algorithm quality and system load are mutually reinforcing factors that influence service quality and, especially, speed.

2024 Service Science Best Student Paper Finalist.

2. Making ChatGPT Work for Me.

with Samantha Keppler and Park Sinchaisri

CSCW, forthcoming.

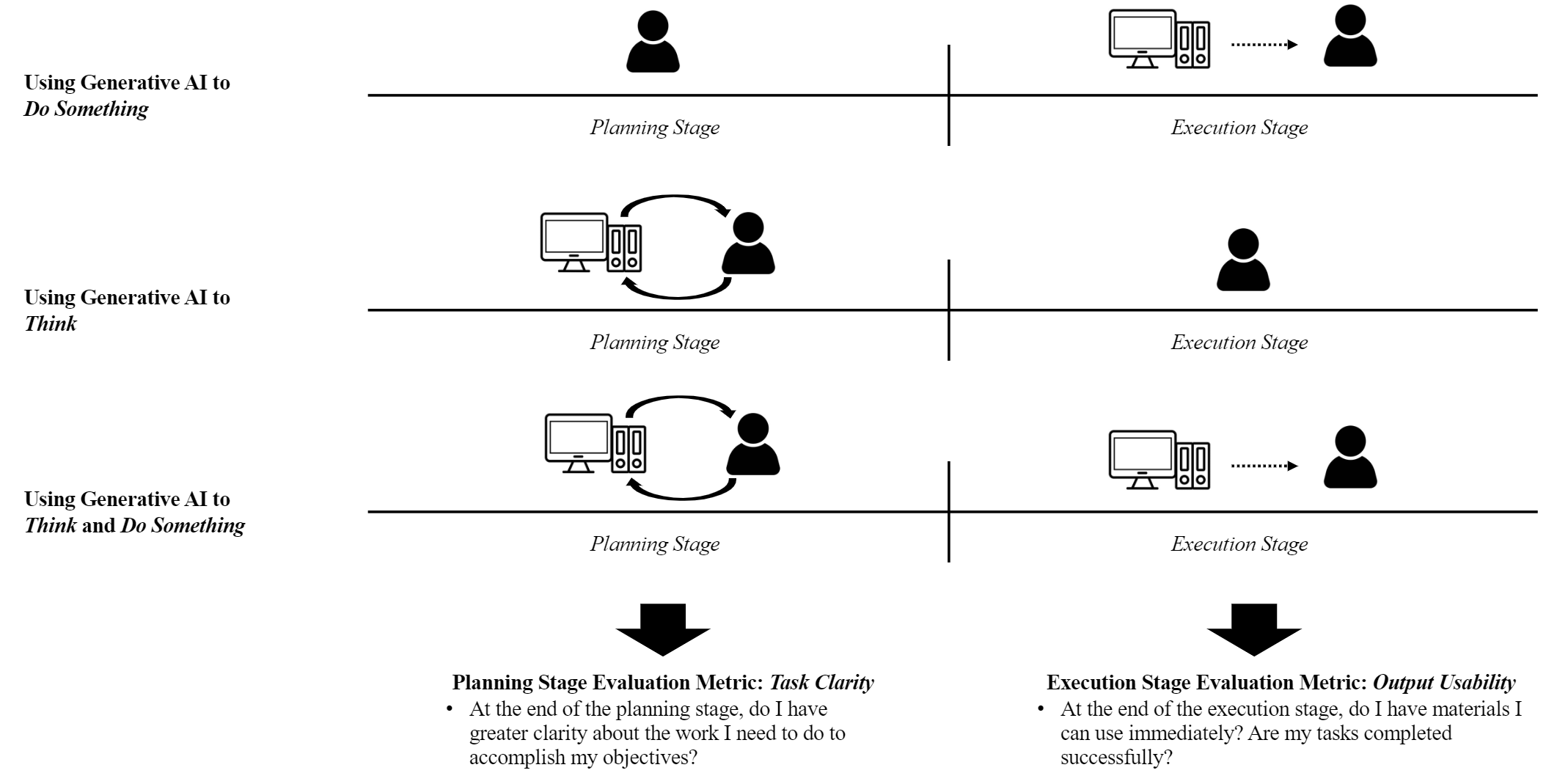

Increasingly, work happens through human collaboration with generative AI (genAI) tools such as ChatGPT. In this paper, we conduct a qualitative study of this collaboration on real-life work tasks, with a focus on K12 education. In one-on-one sessions, we observe how teachers use ChatGPT-4 for their tasks. Analyzing 201 prompts entered by 24 teachers, we uncover four primary support modes: (1) make for me, (2) find for me, (3) jump-start for me, and (4) iterate with me. The first three modes are requests for genAI to do something, whereas the fourth is a request for genAI to think.

3. Backwards Planning with Generative AI.

with Samantha Keppler and Park Sinchaisri

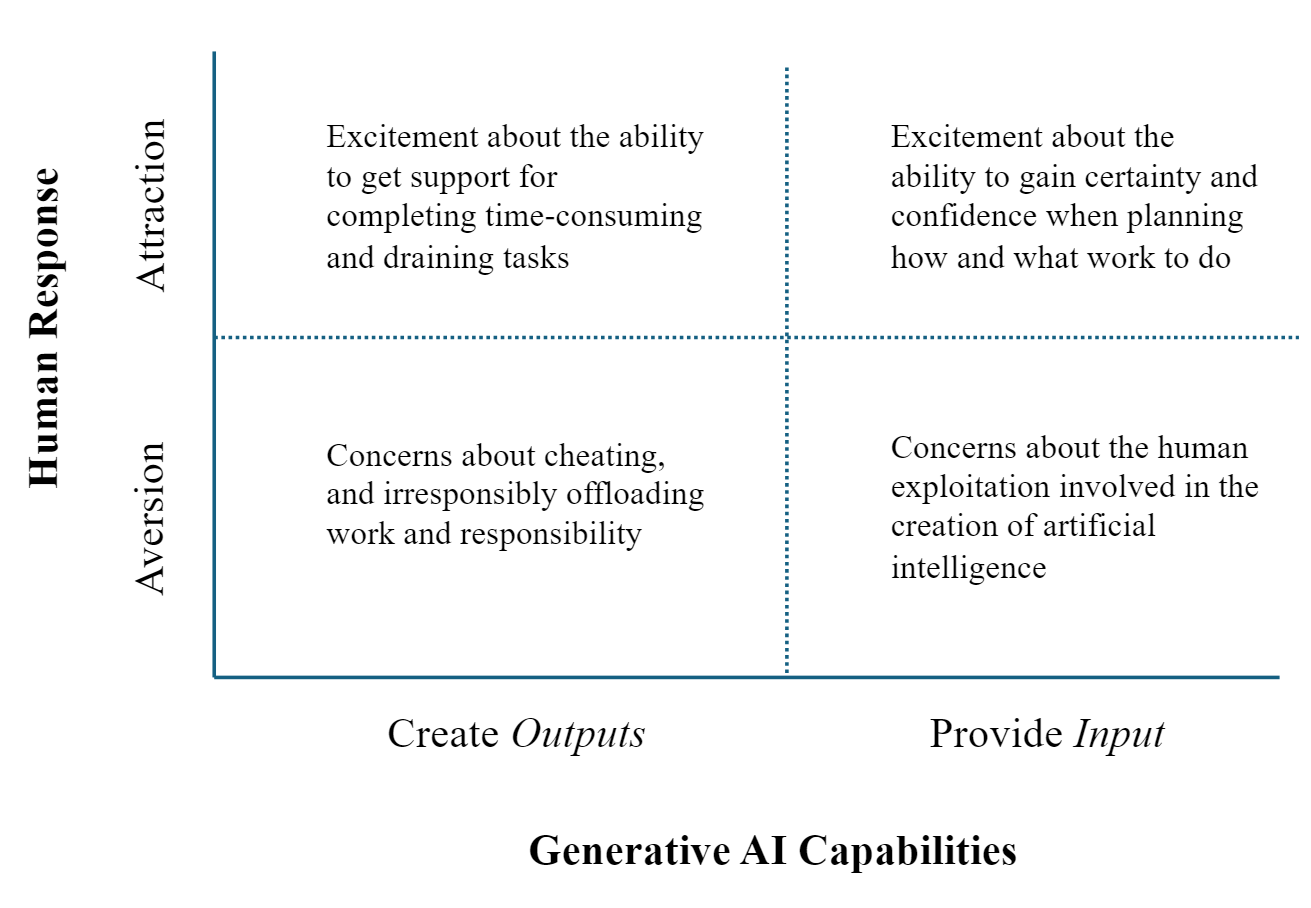

Backwards planning, a universal practice among US K12 teachers, is an effective operational process: work backward from the desired outcome to determine the steps needed to accomplish it. The emergence of generative AI (genAI) raises questions about its impact on teachers' work. How are teachers using genAI within backwards planning? We study this question through a case study of 24 US public school teachers over the 2023–2024 school year, drawing on interviews, observations, and surveys conducted throughout the year. By spring 2024, the teachers had separated into three distinct groups: (1) those who sought both genAI inputs (e.g., advice about lesson plans) and outputs (e.g., quizzes, worksheets), (2) those who sought only genAI outputs, and (3) those who never used genAI. Only teachers in the first group report productivity gains from genAI. Our findings have implications for integrating genAI into goal-oriented workflows.

4. Worker Reactions to (Fair) Algorithms.

with Samantha Keppler and Stephen Leider

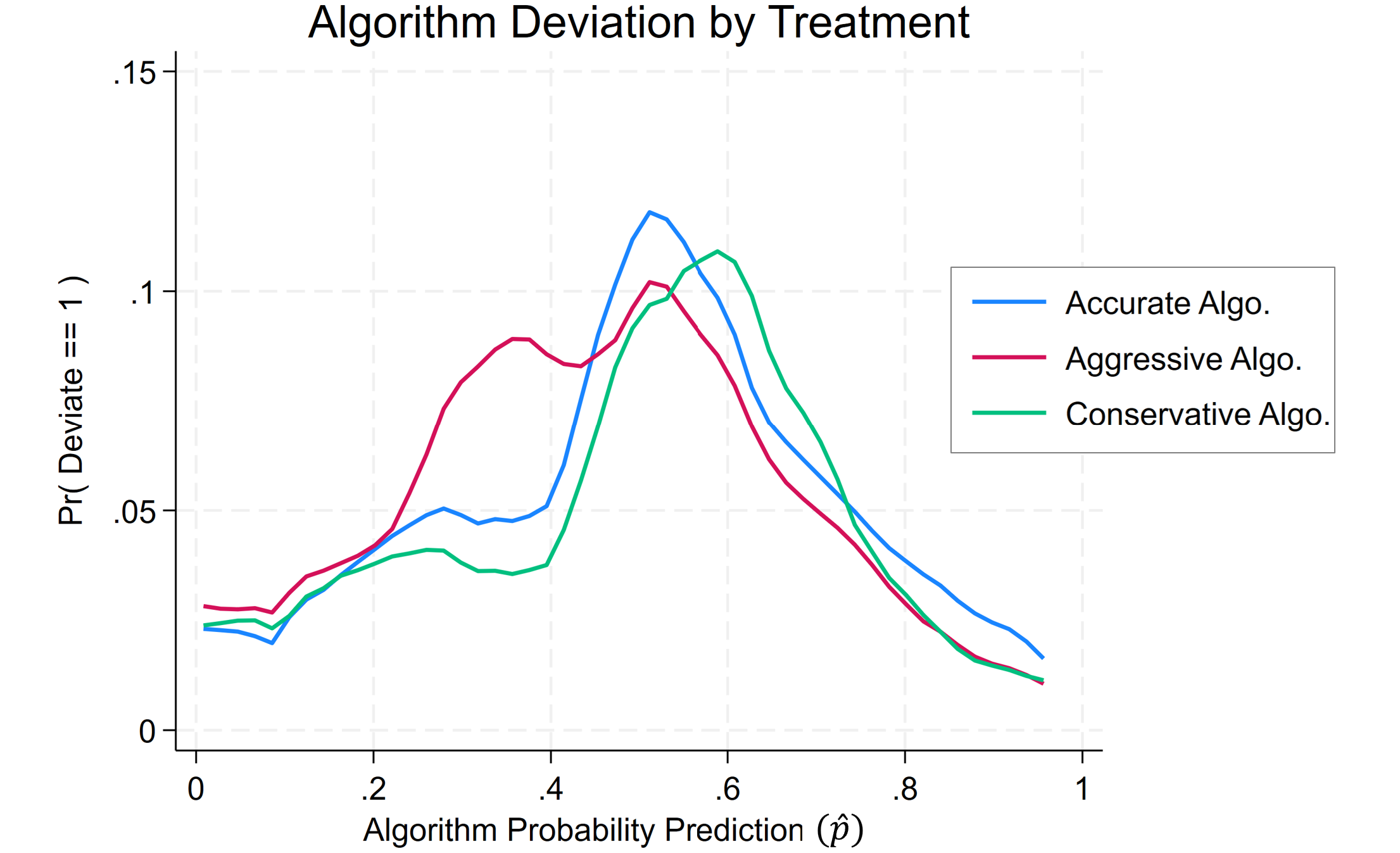

The design of fair AI has received considerable attention, but another stream of research shows that people often under-rely on algorithms. It is therefore possible that when humans have ultimate decision authority, they will deviate from or ignore algorithms' advice in ways that undermine fairness outcomes. We use laboratory experiments to answer the question: how effective will binary classification algorithms designed for fairness (i.e., equal opportunity) be when their advice is channeled through a human decision-maker? We find that the fairness of human-algorithm decisions is not a convex combination of human fairness and algorithmic fairness. Specifically, people are less fair with fair algorithms than they would be without them. This result is driven by the way users deviate from fair algorithms' advice.

Teaching

Michigan Ross | Instructor for TO 313, a core BBA course | Fall 2022 | 76 students | 4.8/5 teaching evaluation

Recipient of the 2024 Thomas W. Leabo Fellowship for academic and teaching excellence.